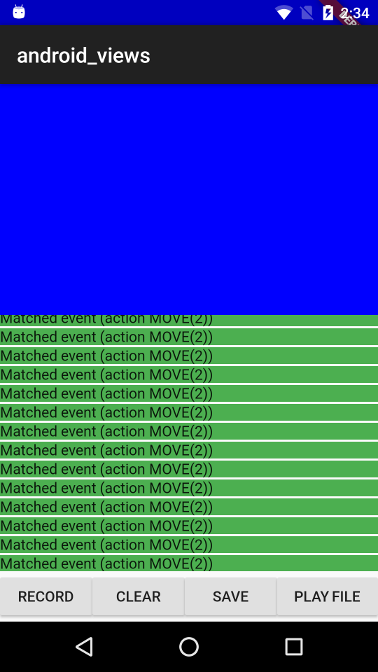

# Integration test for hybrid composition on AndroidThis test verifies that the synthesized motion events that get to embeddedAndroid view are equal to the motion events that originally hit the FlutterView.The test app's Android code listens to MotionEvents that get to FlutterView andto an embedded Android view and sends them over a platform channel to the Dartcode where the events are matched.This is what the app looks like:The blue part is the embedded Android view, because it is positioned at the topleft corner, the coordinate systems for FlutterView and for the embedded view'svirtual display has the same origin (this makes the MotionEvent comparisoneasier as we don't need to translate the coordinates).The app includes the following control buttons: * RECORD - Start listening for MotionEvents for 3 seconds, matched/unmatched events are displayed in the listview as they arrive. * CLEAR - Clears the events that were recorded so far. * SAVE - Saves the events that hit FlutterView to a file. * PLAY FILE - Send a list of events from a bundled asset file to FlutterView.A recorded touch events sequence is bundled as an asset in theassets_for_android_view package which lives in the goldens repository.When running this test with `flutter drive` the record touch sequences isreplayed and the test asserts that the events that got to FlutterView areequivalent to the ones that got to the embedded view.
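
The matching step described above can be illustrated with a small sketch. This is not the test app's actual code (the real matching happens in Dart); it only shows the idea of comparing two events field by field. The class name `MotionEventMatcher`, the map keys (`action`, `x`, `y`), and the tolerance `EPSILON` are all hypothetical and chosen for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Objects;
import java.util.TreeSet;

public class MotionEventMatcher {
    // Hypothetical tolerance for comparing floating-point coordinates.
    static final double EPSILON = 1e-3;

    /**
     * Returns the sorted list of keys whose values differ between the event
     * that hit FlutterView and the event that reached the embedded view.
     * An empty list means the two events are considered equivalent.
     */
    static List<String> diff(Map<String, Object> original,
                             Map<String, Object> synthesized) {
        // Compare over the union of keys so a missing field counts as a mismatch.
        TreeSet<String> keys = new TreeSet<>(original.keySet());
        keys.addAll(synthesized.keySet());

        List<String> mismatches = new ArrayList<>();
        for (String key : keys) {
            Object a = original.get(key);
            Object b = synthesized.get(key);
            boolean equal;
            if (a instanceof Double && b instanceof Double) {
                // Coordinates are compared with a tolerance.
                equal = Math.abs((Double) a - (Double) b) < EPSILON;
            } else {
                equal = Objects.equals(a, b);
            }
            if (!equal) {
                mismatches.add(key);
            }
        }
        return mismatches;
    }

    public static void main(String[] args) {
        // Illustrative events only; the field names are assumptions.
        Map<String, Object> flutterViewEvent = Map.of("action", 0, "x", 10.0, "y", 20.0);
        Map<String, Object> embeddedViewEvent = Map.of("action", 0, "x", 10.0, "y", 20.0);
        Map<String, Object> shiftedEvent = Map.of("action", 0, "x", 12.0, "y", 20.0);

        System.out.println("same event mismatches: " + diff(flutterViewEvent, embeddedViewEvent));
        System.out.println("shifted event mismatches: " + diff(flutterViewEvent, shiftedEvent));
    }
}
```

Because both views share the same origin in this app, a sketch like this can compare raw coordinates directly; a layout with an offset embedded view would need a coordinate translation before the comparison.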